Small picture about data structures

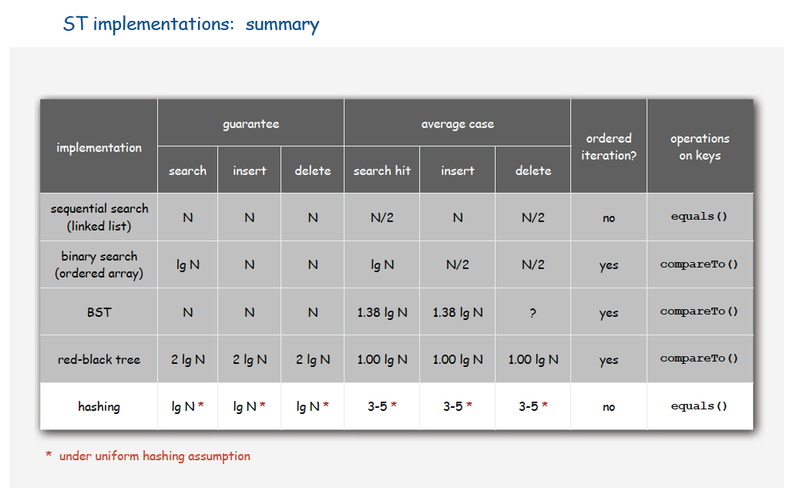

When I've posted the first time my article Java interview for a developer. Collections. Part 2 - Maps. there was a huge discussion about the fact that the worst time complexity of add, remove and search operations are equal to lg N, if hash-function steady placed elements in buckets. A lot of questions were 'why it looks so?'. I want to make notice about this point. This estimation was introduced by Robert Sedgewick in his course Algorithms. I will add here his slide from this course:

So we see that in the case of uniform hashing guarantee time complexity will be O(lg N), but in average case it will be O(1). I should notice that in case of not uniform hashing we could have time complexity O(N), cause we could have such hash function, that will place every element in one bucket, so we receive all elements placed in linked list in one bucket. There were not math proof of this statement in the course as far as I remember, but I suppose you can find it in paperworks of R.Sedgewick.